Bayesian Brittleness in Elec. J. Stat.

The Electronic Journal of Statistics has published an article by Houman Owhadi, Clint Scovel, and myself on the brittle dependency of Bayesian posteriors as a function of the prior.

H. Owhadi, C. Scovel, and T. J. Sullivan. “Brittleness of Bayesian inference under finite information in a continuous world.” Electronic Journal of Statistics 9(1):1–79, 2015.

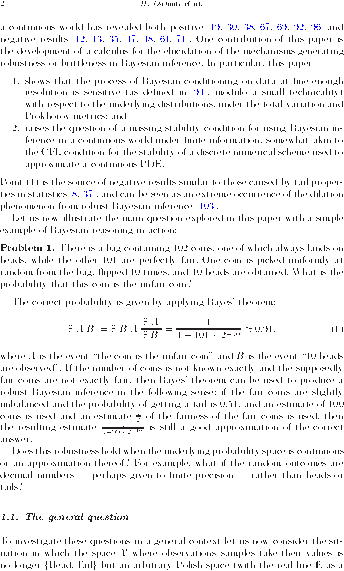

Abstract. We derive, in the classical framework of Bayesian sensitivity analysis, optimal lower and upper bounds on posterior values obtained from Bayesian models that exactly capture an arbitrarily large number of finite-dimensional marginals of the data-generating distribution and/or that are as close as desired to the data-generating distribution in the Prokhorov or total variation metrics; these bounds show that such models may still make the largest possible prediction error after conditioning on an arbitrarily large number of sample data measured at finite precision. These results are obtained through the development of a reduction calculus for optimization problems over measures on spaces of measures. We use this calculus to investigate the mechanisms that generate brittleness/robustness and, in particular, we observe that learning and robustness are antagonistic properties. It is now well understood that the numerical resolution of PDEs requires the satisfaction of specific stability conditions. Is there a missing stability condition for using Bayesian inference in a continuous world under finite information?

Published on Tuesday 3 February 2015 at 10:00 UTC #publication #elec-j-stat #ouq #bayesian #owhadi #scovel